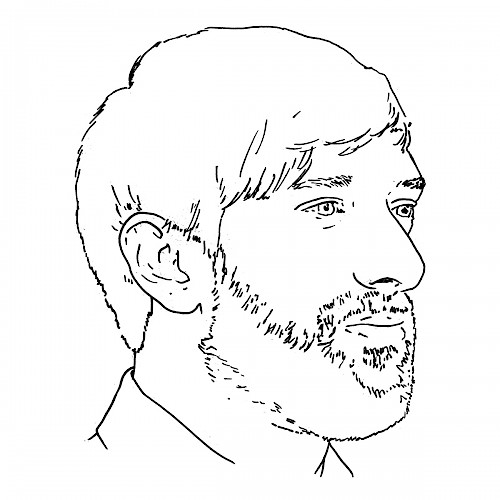

37. Social robots with Bertram Malle

Bertram F. Malle is Professor of Cognitive, Linguistic, and Psychological Sciences and Co-Director of the Humanity-Centered Robotics Initiative at Brown University. Trained in psychology, philosophy, and linguistics at the University of Graz, Austria, he received his Ph.D. in psychology from Stanford University in 1995. He received the Society of Experimental Social Psychology Outstanding Dissertation award in 1995 and a National Science Foundation (NSF) CAREER award in 1997, and he is past president of the Society for Philosophy and Psychology. Malle’s research focuses on social cognition, moral psychology, and human-robot interaction. He has disseminated his work in 150 scientific publications and several books. His lab page is http://research.clps.brown.edu/SocCogSci.

Transcription:

Ben:[00:00:02] Welcome to episode thirty-seven of the Machine Ethics podcast. I'm your host, Ben Byford. And this week, I'm joined by Professor Bertram Malle from the Department of Cognitive, Linguistic and Psychological Sciences at Brown University. We chat about social robots, nurturing robots, appropriate norms for robots in different contexts, trust, sincerity and deception of social agents, communicating decision making, reasoning and norms, and much, much more. You can find more podcasts at machine dash ethics dot net, and you can support the podcast on Patreon dot com forward slash machine-ethics.net. You can find some of our movements on Twitter and Instagram, and if you have questions or want to get in contact, email hello@machine-ethics.net. Thanks, and I hope you enjoy.

Ben:[00:00:53] Thank you for joining me on the podcast, Bertram. If you could just introduce yourself and tell me what you do.

Bertram:[00:00:58] All right. So I'm Bertram Malle. I'm a professor at Brown University in the Department of Cognitive, Linguistic and Psychological Sciences, the longest name of all departments at the university. But it does reflect an attempt to integrate multiple disciplinary perspectives: cognitive science, itself really an interdisciplinary endeavour; psychology, the classical way of thinking about the human mind and behaviour, from the emotional and motivational to the cognitive and social levels; and linguistics, with the understanding that language is really how we do a lot of our social and cognitive work. And in the last five or six years, I've begun to take these perspectives of cognitive science and psychology to better understand what this future of humans with robots, humans with AI, might look like, and there are not that many cognitive scientists and psychologists who are part of this conversation. So it's actually quite exciting to contribute to that and to have a voice like I have at this moment.

Ben:[00:02:04] Thank you very much. We usually start the conversation by laying down some outline definitions. So to you, what is AI? And I guess by extension, since you often talk about robotics: what is AI, and what are robots?

Bertram:[00:02:23] So artificial intelligence is a term that has no one definition, and I think it appropriately changes its meaning depending on the particular context. Originally we tried to literally build a machine version of what we considered human intelligence, which was really focused on reasoning, and that would nowadays be considered just one part of intelligence. People realized, well, there is also what has been called emotional intelligence, and maybe an even more important and encompassing social intelligence. And so when we think about artificial intelligence, I think we have to decide which of these human capacities we are trying to either imitate or replicate or bring into a machine form.

Bertram:[00:03:11] And I think that really is about as far as I want to go, because I wouldn't want to commit to any one version of the term; rather, if you give me a context, then I can tell you which of the intelligent functions are implemented in the machine. Now, the distinction between AI and robotics is also something that is debatable. But when I say machine, it can be any disembodied machine, a computer in the cloud, that performs the intelligence functions.

Bertram:[00:03:44] And when I talk about a robot, like many other people, I'm thinking about an embodied form that does intelligent processing and can either move around in the world or at least manipulate the physical world. It could be an arm, an industrial robot arm that manufactures things, but it does physical things in the world and changes physical things in the world. Even more so nowadays, of course, with what we call social robots, which actually live in both physical and social spaces and thus have an impact on physical and social events. So that to me is the robotic side.

Bertram:[00:04:24] Of course, it seems trivial to say that robots need to have artificial intelligence, but it's actually quite striking how little the robotics field and the artificial intelligence field have been talking to each other. For an outsider, this seemed like a shocking insight. But many computer science departments, at least in the US, have the AI researchers and the robotics folks, and they have not actually been interacting that much with each other. But I think people are beginning to realize that what roboticists build into robots is artificial intelligence.

Bertram:[00:04:57] And eventually, as artificial intelligence takes on more and more roles in the world, it will also become embodied and have physical impact on the world. So to me, this distinction is probably going to blur over time.

Ben:[00:05:11] That's a really good point. I hadn't really thought about it. But I think it's something that is probably just in the background, that those fields haven't historically been getting along or working together in this kind of unified way, which is fascinating. I wonder how that came about? Just a kind of schism of ideas, maybe.

Bertram:[00:05:31] Well, I also think that robotics was originally... So there are the early robots that, you know, the Stanford AI folks built, which were meant to implement perception capacities, minimal reasoning and then action capacities. And then there were some other folks who built machines that did some reasoning, ELIZA and others that tried to pass the Turing test. And I think the robotic side became more applied, and you got all this industrial robot development, and those robots didn't have to be intelligent. They just had to be very reliable, very good at what they were doing. In fact, they were in cages because they were dangerous. People did not interact with them. It was artificial intelligence that went into computers, personal computers, which very much interacted with people. Not very successfully, though, not very well. It was people who had to fix all the problems in the computer. But over time, I think robotics has become more real-world, not just industrial, but in the social world. And AI stepped a little bit out of the computers into our phones, into our environment, into our light switches. And so it now actually occupies more physical space. That's why I think they will be seen as more and more merging, and in ten years people will say, what's even the distinction? And I think that's a good thing, because you shouldn't build robots without a deep understanding of the best artificial intelligence you can put in, and you shouldn't do artificial intelligence without realizing all the physical and social impacts the system has on the world.

Ben:[00:07:08] Yeah. And I guess that leads us nicely to this idea of social robots. So do you have a working definition of that, and some examples of social robots?

Bertram:[00:07:17] Yeah. So we could define social robots as robots that at least occasionally interact with human beings in such a way that the humans also feel they're interacting with the robot, because a robot could do things without people really knowing or noticing or caring about it. I think it's the sort of mutual awareness, if you will, probably in quotes on the robotic side, that makes a social robot truly social: that idea of being social as being interactive over time, having mutual impact, and being aware of each other having that impact.

Bertram:[00:07:54] And the examples are increasing basically every day. You can think about a nurse assistant in a hospital that helps check and monitor patients, can move around from patient room to patient room, and may take notes while the staff are on rounds. You possibly have educational robots that maybe teach children something about nutrition, maybe review things that a human teacher told them, maybe act as an assistant to a teacher that, in the large classrooms we have, may help the teacher control what's going on. And in each case, I think there is an interaction between the robot and people, but for the most part still in this, as I say, assisting function. There are home robots that assist people in a variety of ways, which right now are more or less cell phones that move around on rollers. But eventually there will be more interaction, and we expect more interaction.

Bertram:[00:08:55] I think people overestimate the interaction they have with Alexa. This is really not much of an interaction, but it definitely feels, and begins to feel, like one. So I think the numbers and the examples will expand further. And that's also the danger: that they may expand at a speed we're not quite prepared for in terms of controlling, monitoring, and keeping people physically and psychologically safe. I'm very excited about the potential of social robots helping with a variety of both professional and non-professional tasks that humans are currently not covering. But I'm a little worried that plopping them into these social settings without careful evaluation and an understanding of the psychological impact could definitely lead to negative consequences.

Bertram:[00:09:49] The advantage is that robots are not very good right now. So the progress is actually quite a bit slower than the progress that we perceive in AI. And maybe that will give us time to recognize how we can design them in a safe and beneficial way, so that they can be acceptable members of a community without their capacities being overestimated and without the impact they have being underestimated.

Ben:[00:10:15] I guess the example of the classroom is a good one, because you might have kids that take advantage of the passivity of a robot trying to assist in some sort of corralling, disciplinary way to support the teacher. But if it's too permissive, then, you know, the kids are going to walk all over it and maybe not adhere to any of its rules. But then at what point do you step over that line, and it becomes too much, or too loud, or it says the wrong things? What kinds of capabilities does it have in that environment, what kinds of tasks can it accomplish, and how do we make sure those tasks aren't, not vicious, but kind of harmful to the children's progression and teaching and that sort of thing?

Bertram:[00:11:09] Right. Without probably having an awareness of that. And I think it's really nice that you use the word appropriate, or saying the right thing. You know, this leads to one of my favourite topics in this whole field: how you can have these robots in a social space, in a classroom, in a hospital, in a home, act in ways that basically align with or follow the norms that we have in each of those contexts. And these are sophisticated norms that hold in a classroom, distinct from a home, distinct from a hospital.

Bertram:[00:11:42] I think the recognition that these robots need to do the right thing, and not just in this very abstract way of being fair and just, is very important. Fairness and justice matter as a summary statement, but when it comes down to it, every moment, every minute in a classroom is guided by: what's the next thing to do? What's expected of the robot, of the child, of the teacher? And if these normative expectations are often violated, the interaction will break down. You see this, of course, when children are not following the norms and then they are disciplined, and maybe the disciplining is too strong, which is itself a violation. You have escalation. And of course, this could also happen with the robot. If the robot, as you say, is too permissive, children will take advantage of that, may start to provoke the robot and maybe even damage it. There is evidence that children play with robots to see how much they can get away with, maybe even physically abusing them. So there has to be a balance, almost a dance, between the norms, the expectations and the potential for improving the classroom setting. But this is not in any way an easy task.

Bertram:[00:12:59] We really need to understand the dynamics of the classroom setting before we put a robot into it. Yes, if the robot does one thing, namely stands in front of the classroom, tells a story, basically gives a small lesson, and then the kids ask a question or two and the robot answers as best it can, that's a nice little setting. And then after five minutes, the robot leaves the classroom. But if you really think about a robot being present in the classroom for a long time, this is a much more complex and much more difficult task. At the same time, I think thinking about what that would take, and how it might work, forces us to be more cognizant of the complex dynamics in a classroom. And I've noticed this in my own work collaborating with computer scientists and roboticists: trying to bring a certain function into a robot makes us step back and say, OK, what do we know about this function in the psychological literature? And sometimes we say, well, actually, not enough. How do we build this particular intelligence, let's say norm intelligence or norm awareness, into a robot without really understanding the way it works in the human mind?

Bertram:[00:14:15] And so I think it's actually a beautiful encouragement to rethink what we know about human interactions, settings and dynamics, and to understand them better, so that we can then do a better job of adding robots into that equation.

Ben:[00:14:28] Are there cases where robots are adding new circumstances, so that maybe you can't go away and study them without actually studying them with a robot in the scene, one with some capabilities, if limited, so that you can experiment and get information about people's reactions and how these social interactions play out? Because a lot of these situations are almost too new and too novel, given that these technologies didn't exist before. Or do these norms kind of encompass all those different circumstances?

Bertram:[00:15:13] Well, I think that whenever you add a relatively powerful and influential factor into a system, especially into a dynamic human social system, there will be discoveries, things that you couldn't anticipate and didn't think of before. Some of them will be positive and some of them will be challenging, if not possibly harmful. So I think we cannot have the illusion that we can prepare everything, design and build a robot, then just put it in, and everything will go smoothly. Rather, we need to have two elements working together. One is to really think ahead about how to design something that is likely to work, something that is inserted with good preparation for what that would mean, so that humans feel, wow, this is actually pretty well designed, this already fits with my expectations. But in addition, it has to have a learning function as well, if you will, a constrained learning function: a capacity that will allow it to improve, and to improve in the way we improve, namely by receiving criticism, being informed and doing better. And I think this is both one of the most fascinating and one of the most challenging aspects of ever more autonomous and complex robots, because we need to prevent them from learning the wrong things. We need to make sure that they're learning the right things, maybe in the right circumstances and from the right people. And this is not at all an easy question. I think we cannot really be paternalistic and say, we are building the robot with these norms and these values, that's what is going to be in the shopping aisle, and that's how people have to behave with the robot, because we don't impose this on families, how they raise their children and what norms they teach their children. What we have is that families are embedded in communities, communities are embedded in a town, a town in the nation and in the world.
And in each case, there is some corrective where you basically just get feedback: that's not okay anymore. And I think robots need to be able to take in that feedback and improve over time. But they can't start with a blank slate.

Bertram:[00:17:29] I think that it would be quite dangerous if we just put powerful learning machines into a new setting and said, hey, it's just going to pick it up. That's not going to happen. Anybody who watched Chappie, not such a great movie, definitely got the lesson that if you are not careful about from whom the robot learns what, it is going to be disastrous. So I think we really need to bring together two traditions that are currently, I think, clashing a little. One just tries to program and design machines in advance for a variety of functions, and then they basically execute these programs. That's not going to be enough, because the programs need to be flexible. The other tradition says: let's just build this massive neural network or this learning machine, put it into the setting, and it will somehow figure out what to do. Yeah, the two have to work together. And this is true for humans, too. We are prepared for settings. We are biologically prepared, and then socially and cognitively prepared to do a few things right in a new setting. And then we pick up new things. When you and I go to a new culture, that's exactly what happens. We're prepared with certain knowledge, partially correct, partially incorrect. Then we learn from the first rounds of feedback. We form intentions to do better. And that's what robots need to do, too.

Bertram:[00:18:53] And this is the big challenge: to find that balance such that people feel, wow, this is pretty good, and I will make it better. I will contribute to it being better, and therefore it will make a beneficial contribution to my life and to our lives.

Ben:[00:19:11] I think that's a really nice image you leave us with there, because it's almost like we will be compelled to nurture these things in a way. And that will hopefully reflect back on us and how well we've done in that nurturing process, almost like we've got this small dog type of thing, which will hopefully be able to do useful things for us, but also has some needs and some growth to be nurtured. Which presumably might be quite a satisfying thing to achieve as a user of machines, whether it's specifically meant for health or well-being, or whether it's just something that you happen to interact with at work, maybe. I like the idea that you're almost compelled to be nice to it.

Bertram:[00:20:03] Yeah. I think you're really mentioning two important things here. One is that there's an obligation for us to nurture. Some of my colleagues get nervous when I use this phrase, but I think in some sense we will need to raise robots, and that is an obligation: we have to do this right, and maybe check in with each other on whether we are doing a good job and whether the result appropriately reflects what we expect of each other. It may sometimes even remind us of our own norms and expectations that we sometimes violate. It becomes not necessarily an ethical standard itself, but a well-behaving member of a community that is a little bit of a model, maybe, one that will make mistakes occasionally but does remind us of what we actually care about. So that's the obligation side. The other one, I think, is important too: that we will feel good about teaching, nurturing, raising a robot, just like we feel good when we can teach somebody: children, our students as academics, friends, people who come to our country, to our community. We then build relationships with them, and we benefit from these relationships just as they benefit from them.

Bertram:[00:21:23] I think this is really important. I really do not like it when some people call robots slaves. Apart from the absolutely inappropriate use of this term, in all its connotations, we should really not, you know... We should know about slavery, think about it, look back on how it happened in this country and still happens in some countries. But using this concept as the role for a robot is, I think, really detrimental. We have to think about it as a machine that will take on many different functions, often assistive ones, and whether it will ever reach the same level as us, I don't think so, because that's up to us. I think technologically this is so difficult. It took millions of years to evolve the human mind in all its capacities. I think it would really be an illusion to think this could either be built, or would build itself, to be anywhere near that. But we can make it very good in certain domains and for certain functions. And those functions, I think, should be assistive and maybe collaborative, and that means benefits to both; we get something out of it. We're currently trying to develop a very simple, sort of animal-shaped robot that might help older adults with a few tasks of everyday living. And we are actually really trying to make this robot require the human, just as the human may require the animal, to get some things done. The robot shouldn't be just a servant that does things for the human, in part because that also makes the human feel like, yep, I'm deficient in this, I can't do this. But if the human feels, oh, this thing helps me and I help it help me, then that's a much more natural and, I think, beneficial relation.

Ben:[00:23:18] Yeah. I'm just going to briefly jump back to something you said at the very beginning. You spoke about this idea that there are different types of intelligence, social intelligence, these sorts of things. Do you think they are kind of mutually exclusive in the way they are constructed or formed, or can these different types of intelligence beget each other? You know, maybe we start with a very... There's this idea of narrow AI that has capabilities to enact things or make decisions about a small area. But by broadening it out slightly, we can use some of that knowledge in making some of those decisions, and some of that social interaction comes into that capability. Or do you think it would be, and this is probably more of a technical issue, lots of different types of AI programs effectively working together to create a larger system? If you have any thoughts on that?

Bertram:[00:24:25] Yeah. I mean, there is definitely a technical question there, for which I would not be the ideal person, but at a functional level I do have some thoughts. We know from evolution, from development, and from cognitive studies that there is something like a hierarchy of cognitive and mental functions. What I mean by hierarchy is not necessarily that you could never have the higher function without the lower one, but there is definitely a building up, in development and in use. You see it in a simple example: you can build an AI that is very, very good at playing Go or chess or doing financial calculations for loans, aside from the question of whether those programs really take into account some of the social injustices that exist in this domain. None of these machines need to have social intelligence. But if you build something that has social intelligence, which includes, for example, being aware of norms, recognizing that people are not just moving objects in the physical world but have intentions, goals, beliefs and feelings, and taking that knowledge into account when the system acts and plans its own actions, all of that requires reasoning, memory and logic. You could have those on their own, but you couldn't have social intelligence without them. And this may seem obvious at some level, because, of course, human social intelligence builds on all these other things: memory, reasoning, and projection into the future.

Bertram:[00:26:03] But I think it's important to recognize that the things we are trying to build are not just encapsulated modules; rather, we add functions on top of previous functions. And that's probably a good thing, because it means the previous, simpler, more basic functions have already had some time to mature, to get better, to be tested and evaluated. Then you add a new function, and the uncertainties and variables are located more at that new level. You don't have to go all the way back to the lower levels, such as memory, and fix those problems.

Bertram:[00:26:41] I think this is sometimes what we experience with computers: they're actually not always hierarchically built. You get a new operating system that has new functions, and suddenly some previous things don't work anymore and you don't know why. Somehow, accidentally or intentionally, there is a loss of previous functions. So I think we need to take the lesson of evolution, of development, and of the way our cognitive and brain systems physically work very seriously, and build newer and more complex functions on top of previous ones. I think people are starting to do this, but they are still much more successful at building encapsulated things. So Alexa does pretty well at basically being a microphone and a loudspeaker connected to a speech processing system. Alexa itself doesn't really do much at all, even though we treat Alexa as if it were an agent; the speech processing is done in the cloud, and it's done pretty well. But if you really want some form of social interactive capacity, you need to build on top of that, and you absolutely need the speech processing to be very good. Say the child is quite inappropriately impolite in demanding something from Alexa, and maybe Alexa even hears that this child also starts talking to other people in this inappropriately demanding way. If you want some social intelligence, some normative awareness in your home agent, then the speech processing needs to be very good, very subtle, to recognize that, and then the agent needs the additional function of very gently reminding the child, or modelling for the child, how one expresses certain requests appropriately. And this is, of course, a complex question: how do you do this right without pissing off the parents, who are right there while Alexa is trying to, you know, educate their child?
So these are very interesting, complex questions, but the answers need to be built on very, very good speech processing and memory, in the sense of keeping track of patterns of behaviour.

Bertram:[00:28:55] I think computer scientists probably know that. But when we talk about things like the emotion engine in some of the newer robots, and I'm not even going to mention the company that claims that, I think this is both false advertising and problematic, because many of the basic functions are not even working very well. So to then claim that there's an emotion engine, whatever that exactly means, is really disconcerting. I think we can maybe work towards that, but we have to get a few other things right and reliable first.

Ben:[00:29:31] Well, you'll have to tell me who that is off-air later on. So I like this idea of having these robots act almost as a kind of proxy parent, where maybe it's understood that the child is being negative, kind of blasphemous, you know, in whatever context it is. In different countries there might be different things that they want to put forward as negative in those norms, which is interesting in itself as well.

Bertram:[00:30:06] Right, and you can directly respond and say, Alexa, that's not your job. And then the system learns that. Or: thanks, Alexa, that was actually a good observation; do you see, the way Alexa addressed that, how you can really say this differently? Indeed, it may depend on the family, right? Yes. Some families don't want to have anything like that in their house. Totally their choice. Other families might make use of it to some extent, and others might really integrate this capacity. The system needs to be capable of doing it. The system needs to have some basic norms that it itself will not violate. And whether it takes on the function of being part of this modelling, and maybe a very slight educating process, is, I think, a dynamic process that will emerge in that family, in that community, or in the design team or the company, wherever these ever more intelligent systems are entering. There will be a sort of micro-culture that will embed the system in different ways.

Bertram:[00:31:12] I think that's really the right way of thinking about it: we are not building one type of robot or one type of intelligence that then gets sold in the same way. Rather, we are building and designing things that are capable, and which capabilities are nurtured and highlighted and which ones are shut down is going to be a contextual, cultural, and maybe sometimes very local decision. That also has the implication, by the way, that you don't want to take a robot into a new context without allowing it to learn a lot of things about this context. If this is a robot that works really well in one family with certain functions, you should not suddenly expect it to do other things, like looking after the child while the parents are gone, if that has not been trained and checked and refined. That's not a good idea at all. Nor should this robot suddenly take care of the neighbour, or do the shopping for you: the robot may not know anything about appropriate shopping behaviour unless that was also prepared and refined, maybe through some shadowing, so that the system learns how to act appropriately in the supermarket.

Bertram:[00:32:28] But we are good at doing this. Humans are not just the most amazing learning machine we know of in the universe right now; they are also an amazing teaching machine. They love to teach. They are really good at it. And I think we should really take advantage of that.

Ben:[00:32:45] So a lot of this comes down to preparing people for the types of capabilities, but also the types of nurturing social interaction, that will either be coming in the future or that we already have. So what kinds of things will build, and maybe destroy, trust in these environments?

Bertram:[00:33:07] Well, many, many things. I think trust is this very difficult concept, and also phenomenon, that we're starting to grapple with. More and more of the funding agencies are realizing, oh, we really need to deal with trust, but we don't necessarily have good measurements of trust. We have a decent understanding of how trust works between humans, but we don't really know whether we can apply that idea of trust, and the manifold aspects of trust, to human-machine interaction. We've started to do a little bit of work, both on the measurement side and on the theory side, to really think about trust as multidimensional. There's the trust that industrial robots need and get: that they can reliably perform a certain function over and over again without much deviation. That's being relied on, and we use that term for it; it's a form of very minimal trust.

Bertram:[00:34:09] Another form of trust is that you trust the system is capable, that it can do certain things it hasn't yet shown in exactly the way you hope it will, but it has enough of that potential at its disposal that you let it do something slightly new in a slightly different context. So that's trusting capabilities: it isn't just repetition, it's a new version. And then there are what we call moral forms of trust, which so far have largely existed only in human relationships. One is sincerity. I trust you. I trust what you say. I trust that your action is genuine. That is a very different thing. It basically means that what I see and what I hear stands for the right attitude, desire, goal or belief. And my inference can be made with that feeling of trust and with my ability to maybe take a risk: that I trust what you say and therefore follow your advice, that I trust your action and therefore put myself in your hands.

Bertram:[00:35:15] So far, this hasn't really been either necessary or appropriate for robots, but it is starting to be, because robots say things that really don't correspond to anything inside. The robot says, "I'm sad," but there's nothing like a sad state in this machine, and that's deceptive. Then we need to figure out whether we should trust this statement or whether we actually realize this is manipulative programming by the machine.

Bertram:[00:35:45] Another aspect of morality is, more broadly, what we can call ethical integrity. Basically, the ability and commitment to follow the norms and important values that we expect of the system; they will be slightly different depending on the role, and that's also true in human cases. But we expect that once certain role norms and practices are in place, I can rely on you to stick to them. Then I know that you will respect me, that you will be fair, that you will have my interests in mind. And this is, in a sense, a whole set of smaller capacities that come together in the form of ethical competence or moral competence. Clearly, right now, robotic and A.I. systems barely have a few small morsels of this. But if you really think that robots can be part of human communities and live in these normative systems that we touched on earlier, they need to have that form of ethical integrity, and we need to be able to justifiably trust those capacities. Not just that the robots reliably do the same thing over and over, that they can do some things, physical or otherwise, in the future, and that they are at least not deceptive; we expect them to do a lot of other things that we would consider moral, at least with a small m, not necessarily with a capital M. And I think that we will get to that. There are people working on building systems that justifiably perform these functions, so that the trust people have is not over trust, which sometimes happens now, but well-calibrated trust: I see what it can do and what it cannot do, and I trust it only for those things for which I have evidence that it can do them. Whereas now we really don't understand these systems very well. So people are either very sceptical and under trust, or they are impressed and think that just because Alexa talks so well, it actually has communicative understanding of our conversation. And then I over trust it to be able to do things that it absolutely is not able to do.

Ben:[00:38:03] Yeah. I think those things resonate with me with regard to automated cars, because I think there's a trust equation that happens there. And I think possibly we are overexcited, but also maybe over trusting in these sorts of situations as well.

Bertram:[00:38:21] Yeah, there's definitely over trust in terms of reliability and capability. But consider when the cars, for example, talk to us or make some decisions in morally challenging situations. People have worried about trolley dilemmas and other moral dilemmas for cars, though I think this is actually not the domain we should be most worried about; I'm much more worried about these moral dilemmas in social robot settings. But even with cars, there will be such decisions. If the system then tells me what it did and why it did it, or what it will do, I really need to believe that it's sincere. I really need to have evidence that what it says reflects the internal state of the system. And I need to believe that if I buy the car, or maybe set a particular parameter a certain way, it reflects the norms that I expect of it and that it will, in a sense, do this. And then I might be responsible for the setting that I put in there. It is my car and I put the setting there, if that's how manufacturers build the cars. And then I will be responsible, but only if the system does what I ask it to do.

Bertram:[00:39:33] Maybe we won't have those options. Maybe, in fact, we won't even own our own cars. And maybe that's safer: basically, it's the community norms that are studied and put into the cars, and everybody has to abide by them. The car doesn't make special allowances for who owns it, but rather considers the passengers, the pedestrians and other cars, and it has a certain system of balancing them out. That's how the community decides to do it. I think that's probably the way we will resolve some of those moral dilemmas, because for financial, technical and maybe other reasons, having your own car, being the owner, might be a concept that will relatively soon be outdated.

Ben:[00:40:12] Yeah, I certainly believe that might be the case, that we are moving towards an ownership-less model, and I think it's just an efficiency thing. If we're talking about efficiency and safety, then we step into a taxi-like or bus-like situation where it's automated and we can freely go to lots of different places we need to get to, in the most efficient way possible. We're not going to own those vehicles. You know, there's no individual need for that to happen. It's probably detrimental.

Bertram:[00:40:50] Yeah, I absolutely agree it's an efficiency advantage, financial and otherwise, but it is also a responsibility advantage, in the sense that the responsibility is clearly defined by either the taxi company, the city that provides the cars or the manufacturer, and not by some combination of owner, user, manufacturer and policy maker. It's relatively clear: the system operates this way, and if it commits this mistake, maybe a very harmful action, then we know how to fix it. And we know who is responsible for that. But if you own a private car and change the settings, then ultimately you are the one who's responsible. Maybe that's what we will accept, but I'm not sure that's necessarily the best way of doing it. You could choose between two taxi companies, one that has these settings and the other that has those settings, unless policy makers say, "No, there is no market for that; we're building it this way. This is our culture. This is how we do it." But then it becomes interesting. If you cross state lines in the US, driving habits and norms change. So the question is: the taxi that gets you from Rhode Island to Massachusetts, is it going to change its norms as it crosses the state line or not? These are actually fascinating questions that also apply to social robots: how subcultures and changes of context may require adjustments, which we make all the time. When we go to Massachusetts, we have to drive a little more aggressively and faster. The question is, is that then also a setting that the taxi, the community-shared self-driving car, will have?

Ben:[00:42:32] I mean, that would be super interesting. I imagine there'll be some sort of transparency thing, like: "Excuse me, we're moving over county lines or state lines and these norms conflict. Just to make you aware. Would you like to accept, or to learn more about, these new norms?" Or whatever, maybe.

Bertram:[00:42:58] ...fascinating questions that you've touched on, because people are worried about even getting these cars reasonably reliable, not crashing into other cars or getting completely confused when it's raining.

Ben:[00:43:09] Yes. Yeah. Not to belittle those things; those are very difficult problems to solve. Sure. I was just thinking, I had this bizarre idea while you were talking about... kind of the idea that a person might have their own...

Bertram:[00:43:26] Setting or parameter options?

Ben:[00:43:28] Yeah, exactly. Having their own parameter options, and imagining a companion A.I. in my head, a bit like in Iron Man or something like that, which is a single entity that follows you around and can interact with different things.

Ben:[00:43:46] So the car or your kettle or your fridge or your medical robot are essentially dumb: they have capabilities, but they don't have social capabilities. And your assistant has the social capabilities, but also the capabilities to interact with all these different systems, and those systems talk back. So you'd say, "Could you put the kettle on?", and it would be able to do that, but it would also be able to interact with the car system, work out if there's a problem with the car and tell you about it. You know, avoiding this idea that there are lots of different systems, each with individual social A.I.s that have these human interactions, when it's maybe not necessary for everything to be able to talk to you.

Bertram:[00:44:42] Yeah, I agree. But we have to recognize that humans are particularly well tuned to interacting with individual agents. So if I have my little A.I. in my head or behind my ear, it might be my better memory or my better conscience. Then I don't think I will want to have that agent plus the car as another agent and observe how they work out conflicts. I think either my agent takes over the car and thus reflects completely what I expect of it, and I know that I can trust it, and I don't have to worry about whether the car follows my agent's request or not. Or the car is an agent with which I interact. We're very good at dealing with one agent at a time. We are capable of dealing with multiple agents, but it becomes quite complex, and we are sometimes reluctant, in part because we see the discrepancies and then have to decide which side to take or who has our best interests in mind. So I think these agents could merge, or one could take over the other. But I think that also requires that we have different levels of agents, because otherwise it becomes a weird identity merging. My conscience, which is partially me or reflects me, raises its own questions about what exactly my relationship to it is. Maybe it's like my diary; I guess that could be it. But then there's the car agent: does the car agent suddenly become comatose when my agent takes over, and do I feel bad about the car agent not being in power anymore? Those are very interesting and almost weird questions that I think we will not be able to avoid, because these are human psychological responses that have evolved, biologically and culturally, to an extent that we will not easily unlearn them. I'm not sure whether we will ever unlearn them, but certainly not in the transition phase. So we really need to be well informed about these psychological responses.

Bertram:[00:46:43] And this is, I think, where the interdisciplinary commitment is there in theory but not as much in practice: the psychological knowledge we have, or the knowledge that we have yet to gain, is not yet fully interacting with technology development and the policy development that is just beginning. So I think we will probably need some correctives, or there will be some bad mistakes made. And then maybe people will realize, "Oh, maybe we need to recognize how people respond to these systems."

Ben:[00:47:17] Are there any instances you've seen so far of really interesting effects that a system has had on someone?

Bertram:[00:47:27] Well, we talked about the Alexa system earlier, where Alexa works best, apparently, or at least did in the earlier version, when you give it very clear, impolite, politeness-stripped commands. But then if your children or the other people in the household start adopting that way of talking with each other, that's clearly not the right way to go. The psychological mechanism that underlies that is modelling, imitation and mimicry. This is a powerful mechanism, and we can take advantage of it by having the system model the behaviours that we actually would like to see. Then, without much intentional effort, we will start doing them as well. And there are at least some plans, for example ones the U.S. military has been toying with, to embed robots in platoons of human soldiers, not just, or maybe not even primarily, as fighters, but as reminders of the normative system: of the rules of engagement, of the values and humanitarian principles that the platoon should obey. There is evidence that there are more violations than is really acceptable, but because of the loyalty system within a platoon, those violations are not being noted, reported and enforced. Only much later, when soldiers look back at their experiences, does it come to light what those violations actually were and how many mistakes and sometimes really inhumane things occurred.

Bertram:[00:49:14] So the idea is to put a robot in there, not as the school teacher who says, "You are not supposed to do that," but merely as the one that acts in the appropriate manner and might make an adjustment here or there and remind them, maybe subtly. And I think that could be a way of taking advantage of the psychological mechanisms of mimicking and of wanting to follow norms, unless emotion and cognitive overload really distract us from following them.

Bertram:[00:49:47] So there's potential here: almost all the psychological mechanisms that could be traps could, if we think them through, be opportunities to make the system and the human work together in harmonious and successful ways, rather than in ways that create problems and costs. But it requires that we think things through and observe some of these behaviours, either in advance or in early stages, and that we carefully and constantly evaluate the systems as we deploy them, to make sure they really improve things and do what we expected them to do.

Ben:[00:50:22] I think that's a really fascinating instance. So we're getting towards the end now. Is there something that you wanted to talk about which we haven't covered yet?

Bertram:[00:50:31] Well, we touched briefly on a function that I think is very important, both from the perspective of understanding machines better, which is really important (we really have fallen behind in understanding these machines, and as humans we have to catch up a little bit as long as we interact with them), and for the transparency of these systems, but also for the natural social interactions we have with these systems. And that's explanations. The system explains to the human user why it did something or, for example, what it is about to do. And it gives a reason. A reason is sometimes merely cognitive and informational, but often it is justifying, that is, again, moral justification with a small m. The system says it did something because of a norm that it is obligated to follow, or is committed to follow, or that it understands both you and it are expected to follow. And these explanations and justifications, I think, are critical for building trust at that psychological level.

Bertram:[00:51:43] They are also critical for what people call verification, and for guarantees that the system reasons in a way that lets it read off from its own decision making why it did something, and that it can communicate that to the human user in ways the user can understand. I think it's unacceptable for these systems to operate at only one level. I don't want to be down on neural networks; deep learning absolutely has its important functions. But I would not want the system to do its logical and social reasoning only at that level, because then basically you have two choices: either the human user will not understand at all why the system did what it did, or you have to give a fake explanation, an explanation that doesn't really reflect why the system did what it did. Maybe there's a compromise; right now, I don't really see successful compromises. So it would actually be better if, at least at the level of reasoning, the system had actual reasons, goals, beliefs, knowledge and norms that guide its actions. Then, if the human asks a question, it can provide these reasons. That, I think, would really help social interaction, both trust and the smoothness of the interaction. And sometimes the human user will give an explanation from which the system needs to learn something: the human user can explain why he or she did something, and the system can then take that into account. This is how we coordinate interactions with each other. We get confused, we explain to each other, and then we have knowledge, and next time we won't be as confused.

Bertram:[00:53:17] So I think that's a function that is beginning to be on people's radar. There is XAI, explainable A.I., but I think it needs to take a further step and recognize that this is a social, interactive process. Explanation is not just for checking whether the system works well, though it is very important for that; it's also a social, interactive function that we require of each other, and that we will require of these systems to the extent that we socially interact with them.

Ben:[00:53:51] Yeah, and like you say, that's really important for trust, but also for the legality of these systems, their operation and all these other things: making them explainable to a level which satisfies our norms and, you know, what we would expect from one another, I guess. It doesn't need to explain everything in detail. It just needs to give us a high-level understanding which we can take away, appreciate and move forward with.

Bertram:[00:54:22] Yeah, it's a hugely challenging function, because to the extent that we understand explanations, they are sophisticated. Sometimes we give one-sentence explanations and the audience member fills in the four missing premises. It's just incredible how good we are at that, in part because we have grown up together, it's been cultivated and socialized, and we know what to fill in because we have common ground, common knowledge, common norms. And that leads us back to our earlier conversation: it requires these machine systems to also grow into, and be socialized into, this social system of norms, common ground and common knowledge. Then they will be able both to understand short explanations and to give appropriate short explanations that don't bog down the conversation, but that recognize the one missing piece the other person needed in order to understand, or trust, or take the next step.

Ben:[00:55:19] And then that will grow into better conversations and better social interactions as well, I guess.

Bertram:[00:55:24] Yeah.

Ben:[00:55:24] It's interesting. Well, the last question we like to ask on the podcast is: in this world of A.I. that we are currently in and moving into, what excites you and what scares you the most?

Bertram:[00:55:40] You know, what scares me the most is actually easier to say: naivete on the part of human designers, policymakers and also users, believing that these systems are just going to perform the functions we would like them to perform, without proper planning, checking, evaluation and constant monitoring of whether they really are capable of performing them. So I'm actually more afraid of humans being irresponsible, naive and maybe driven by economic concerns to put these things into our world when they're really not ready yet, and maybe when we are also not ready.

Bertram:[00:56:16] What excites me about it is really two things that belong together. One is that it forces us to think about human social interaction, human intelligence, human capacities and maybe the best sides of humans, because we want to build these systems as a reflection of our best sides and try not to build them as a reflection of our darkest sides, which many science fiction movies do. They show robots as a reflection of the worst of humans, when in fact it would be nice to show a few examples of the best of humans, the best of our capabilities. So what excites me is that we are reflecting on our human intelligence, our social capabilities, and also our flaws and how we can avoid them. And as these systems, which may reflect our better sides, begin to become members of our communities, they may actually bring the social life we live closer to the positive and successful interactions that we do have, a social life that is also sometimes marred by very negative interactions. So maybe the balance can shift more towards the world that we would like to have, not the world that just a few people would like to have, but a world that many, even most, people would like to have. And then the community as a whole really benefits.

Bertram:[00:57:40] This is what humans are so amazingly good at: we set practices, norms and institutions in place that are supposed to benefit the larger community and not just the individual. We have hierarchies that unfortunately benefit the ones on top much more. But generally, we are quite capable of finding ways to benefit the community as a whole, and thus each individual, and I think that A.I. and robotics could be one of those mechanisms that we put in place to help us get there. And maybe that would also lead a little more towards lowering the steepness of our hierarchies and empowering people who currently don't have as much power, rather than, as has been the case in the computer revolution, the first ones to get the technology being the ones highest in the power hierarchy and the economic hierarchy. So I think, as designers, we need to be very careful not to build more machines for the elite, but rather machines that really help the community at large, and maybe precisely the people who currently are not benefiting as much. That, to me, would be a really noble and powerful goal. It is absolutely within our reach. But dedicating our technological, psychological and social care to it will be difficult; there's no doubt about it.

Ben:[00:59:03] Amazing. Thank you. So thanks very much for joining me today on the podcast. If people would like to find out more about your work, follow you, contact you, all that sort of thing, how do they do that?

Bertram:[00:59:15] It's pretty easy. Google Bertram Malle and you'll get to a few web pages. Probably the best one to start with is my lab web page, which has publications and research topics, and there are also other pages that summarize the research: the department page and the Brown University page. They all overlap, so it's fairly easy to find. There are also a couple of videos I saw recently, so you can check out one of those. But I think, as academics, that's our best voice forward. And I would say, and this is my thanks to you, it's also great to sometimes have an opportunity to talk in this kind of forum that I think not only academics will listen to. So thank you to you, and thanks to the audience for giving me some time to talk about these exciting topics.

Ben:[01:00:12] Thanks again to Bertram Malle for a fantastic chat.

Ben:[01:00:15] We went into all sorts of subjects there, and the idea of social robots and the different norms associated with different contexts and environments is something I hadn't really considered much before, so it's really great that Bertram could get to the nub of the situation. I'd be interested to learn more about people in this space working with social robots in different environments, like the medical environment or the school environment, things that are really happening now. That's something I should seek out, and maybe get them on the podcast too. Hopefully they're thinking about some of these different contexts and how they affect people. If you'd like to hear more from me, then go to Patreon dot com forward slash machineethics. Thanks again. And see you next time.